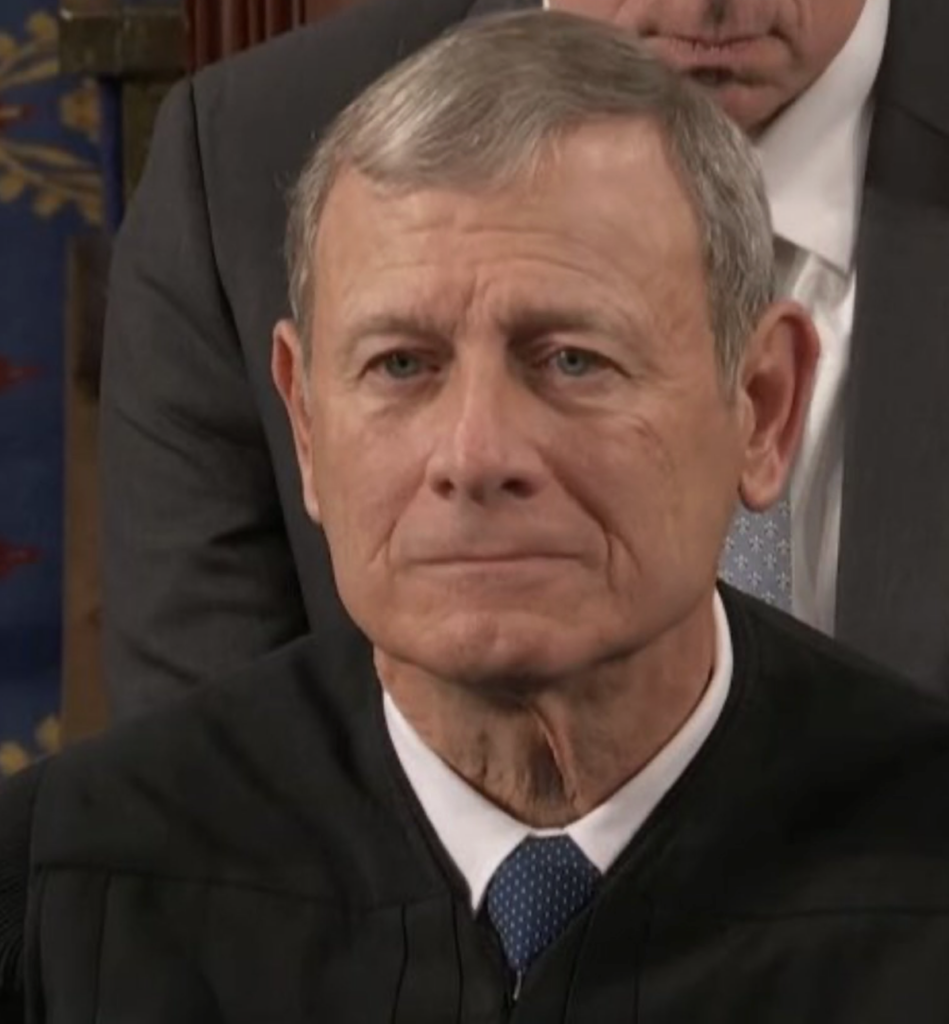

Hannah Arendt, in the year the Nazis came to power

From Eichmann in Jerusalem:

Now and then, the comedy breaks into the horror itself, and results in stories, presumably true enough, whose macabre humor easily surpasses that of any Surrealist invention. Such was the story told by Eichmann during the police examination about the unlucky Kommerzialrat Storfer of Vienna, one of the representatives of the Jewish community. Eichmann had received a telegram from Rudolf Höss, Commandant of Auschwitz, telling him that Storfer had arrived and had urgently requested to see Eichmann. “I said to myself: O.K., this man has always behaved well, that is worth my while . . . I’ll go there myself and see what is the matter with him. And I go to Ebner [chief of the Gestapo in Vienna], and Ebner says – I remember it only vaguely – ‘If only he had not been so clumsy; he went into hiding and tried to escape,’ something of the sort. And the police arrested him and sent him to the concentration camp, and, according to the orders of the Reichsführer [Himmler], no one could get out once he was in. Nothing could be done, neither Dr. Ebner nor I nor anybody else could do anything about it. I went to Auschwitz and asked Höss to see Storfer. ‘Yes, yes [Höss said], he is in one of the labor gangs.’ With Storfer afterward, well, it was normal and human, we had a normal, human encounter. He told me all his grief and sorrow: I said: ‘Well, my dear old friend [Ja, mein lieber guter Storfer], we certainly got it! What rotten luck!’

And I also said: ‘Look, I really cannot help you, because according to orders from the Reichsführer nobody can get out. I can’t get you out. Dr. Ebner can’t get you out. I hear you made a mistake, that you went into hiding or wanted to bolt, which, after all, you did not need to do.’ [Eichmann meant that Storfer, as a Jewish functionary, had immunity from deportation.] I forget what his reply to this was. And then I asked him how he was. And he said, yes, he wondered if he couldn’t be let off work, it was heavy work. And then I said to Höss: ‘Work – Storfer won’t have to work!’ But Höss said: ‘Everyone works here.’ So I said: ‘O.K.,’ I said, ‘I’ll make out a chit to the effect that Storfer has to keep the gravel paths in order with a broom,’ there were little gravel paths there, ‘and that he has the right to sit down with his broom on one of the benches.’ [To Storfer] I said: ‘Will that be all right, Mr. Storfer? Will that suit you?’ Whereupon he was very pleased, and we shook hands, and then he was given the broom and sat down on his bench. It was a great inner joy to me that I could at least see the man with whom I had worked for so many long years, and that we could speak with each other.” Six weeks after this normal human encounter, Storfer was dead – not gassed, apparently, but shot.

Is this a textbook case of bad faith, of lying self-deception combined with outrageous stupidity? Or is it simply the case of the eternally unrepentant criminal (Dostoevski once mentions in his diaries that in Siberia, among scores of murderers, rapists, and burglars, he never met a single man who would admit that he had done wrong) who cannot afford to face reality because his crime has become part and parcel of it? Yet Eichmann’s case is different from that of the ordinary criminal, who can shield himself effectively against the reality of a non-criminal world only within the narrow limits of his gang. Eichmann needed only to recall the past in order to feel assured that he was not lying and that he was not deceiving himself, for he and the world he lived in had once been in perfect harmony. And that German society of eighty million people had been shielded against reality and factuality by exactly the same means, the same self-deception, lies, and stupidity that had now become ingrained in Eichmann’s mentality. These lies changed from year to year, and they frequently contradicted each other; moreover, they were not necessarily the same for the various branches of the Party hierarchy or the people at large. But the practice of self-deception had become so common, almost a moral prerequisite for survival, that even now, eighteen years after the collapse of the Nazi regime, when most of the specific content of its lies has been forgotten, it is sometimes difficult not to believe that mendacity has become an integral part of the German national character.

During the war, the lie most effective with the whole of the German people was the slogan of “the battle of destiny for the German people” [der Schicksalskampf des deutschen Volkes], coined either by Hitler or by Goebbels, which made self-deception easier on three counts: it suggested, first, that the war was no war; second, that it was started by destiny and not by Germany; and, third, that it was a matter of life and death for the Germans, who must annihilate their enemies or be annihilated. Eichmann’s astounding willingness, in Argentina as well as in Jerusalem, to admit his crimes was due less to his own criminal capacity for self-deception than to the aura of systematic mendacity that had constituted the general, and generally accepted, atmosphere of the Third Reich.

***

From The Triumph of Stupidity:

In the first quarter of the 21st century, it is still easy for us to forget that the idea of universal basic education – the commitment to the idea that the average person should, at a minimum, be able to read and write – remains practically a brand new concept in historical terms.

That, perhaps, is one reason why the social ubiquity of stupidity remains relatively unappreciated and under-analyzed, although it would seem that the Trumpist era is rapidly doing its best to remedy this situation. Indeed, Dietrich Bonhoeffer’s brief essay on the subject, now more than 80 years old, remains one of the few notable exceptions to this rule. Bonhoeffer was murdered by the Nazis in the year before Donald Trump’s birth, but at this historical moment his words resonate perhaps more powerfully than ever:

“In conversation with him, one virtually feels that one is dealing not at all with a person, but with slogans, catchwords and the like that have taken possession of him. He is under a spell, blinded, misused, and abused in his very being. Having thus become a mindless tool, the stupid person will also be capable of any evil and at the same time incapable of seeing that it is evil. This is where the danger of diabolical misuse lurks, for it is this that can once and for all destroy human beings.”

This is, given Bonhoeffer’s fate, eerily similar to the conclusion Hannah Arendt reaches about one of the chief architects of the Nazi mass murder, in her book Eichmann in Jerusalem. Adolf Eichmann’s crime, per Arendt, is at its core “the inability to think.” Eichmann is guilty of genocide and stupidity; or more precisely he is guilty of genocide because of his stupidity. His malevolence is a product of his stupidity, and vice versa. History may well reach a similar judgment about the man who is even now leading America into catastrophe.

In a prescient essay entitled “The Triumph of Stupidity,” published in the year the Nazis came to power in Germany, the philosopher Bertrand Russell noted:

“Given a few years of Nazi rule, Germany will sink to the level of a horde of Goths. What has happened? What has happened is quite simple. Those elements of the population which are both brutal and stupid (and these two qualities usually go together) have combined against the rest. . . . The fundamental cause of the trouble is that in the modern world the stupid are cocksure while the intelligent are full of doubt.”

Russell’s thoughts – now more than 90 years old – on the contrast between the intellectuals of the 18th and 19th centuries and those of his day, are quite gloomy. It is, he says, true that the most able thinkers of his day have a more intellectually sophisticated and more accurate outlook than their predecessors. Yet Enlightenment and Victorian thinkers had influence on public affairs, while today’s most gifted intellectuals “are impotent spectators.”

He suggests that “if intelligence is to be effective, it will have to be combined with a moral fervor which it usually possessed in the past but now usually lacks.”

The post The aura of systematic mendacity appeared first on Lawyers, Guns & Money.